Installing Kubernetes v1.21.10 from Binaries. Published 2024-09-03 | Updated 2024-09-04


Cluster Environment Preparation

Host Planning

| Host IP | Hostname | Spec | Role | Software |
| --- | --- | --- | --- | --- |
| 10.254.16.184 | k8s-master1 | 2C4G | master, worker | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, Containerd, runc |
| 10.254.30.124 | k8s-master2 | 2C4G | master, worker | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, Containerd, runc |
| 10.254.18.125 | k8s-master3 | 2C4G | master, worker | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, Containerd, runc |
| 172.31.38.147 | k8s-worker1 | 2C4G | worker | kubelet, kube-proxy, Containerd, runc |
| 172.31.85.248 | ha1 | 1C2G | LB | haproxy, keepalived |
| 172.31.92.50 | ha2 | 1C2G | LB | haproxy, keepalived |
| 172.31.92.8 | / | / | VIP (virtual IP) | |

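Software Versions

| Software | Version | Notes |
| --- | --- | --- |
| AWS EC2 | kernel 5.17 or later | 6.1.96-102.177.amzn2023.x86_64 is used here |
| kubernetes | v1.21.10 | |
| etcd | v3.5.2 | |
| calico | v3.19.4 | |
| coredns | v1.8.4 | |
| containerd | 1.6.1 | |
| runc | 1.1.0 | |
| haproxy | 5.18 | |
| keepalived | 3.5 | |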

Network Layout

| Name | CIDR / address |
| --- | --- |
| Node network | 10.254.0.0/16 |
| Service network | 10.96.0.0/16 |
| Pod network | 10.244.0.0/16 |
| coredns | 10.96.0.2 |
| kubernetes svc | 10.96.0.1 |
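The plan only holds together if the three ranges do not overlap and both fixed Service IPs (10.96.0.1 for the kubernetes Service, 10.96.0.2 for CoreDNS) fall inside the Service network. The following pure-bash sketch checks those constraints. The helper names `ip_to_int`, `prefix_mask`, `ip_in_cidr` and `cidrs_overlap` are hypothetical, and the code handles IPv4 only.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check the network plan (IPv4 only, pure bash arithmetic).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Netmask for a prefix length, e.g. 16 -> 0xFFFF0000.
prefix_mask() {
  echo $(( $1 == 0 ? 0 : (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# Succeed if IP $1 lies inside CIDR $2.
ip_in_cidr() {
  local mask
  mask=$(prefix_mask "${2#*/}")
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "${2%/*}") & mask) ))
}

# Succeed if CIDRs $1 and $2 overlap (compare under the shorter prefix).
cidrs_overlap() {
  local b1=${1#*/} b2=${2#*/} mask
  mask=$(prefix_mask $(( b1 < b2 ? b1 : b2 )))
  (( ($(ip_to_int "${1%/*}") & mask) == ($(ip_to_int "${2%/*}") & mask) ))
}

NODE_CIDR=10.254.0.0/16 SVC_CIDR=10.96.0.0/16 POD_CIDR=10.244.0.0/16
for ip in 10.96.0.1 10.96.0.2; do
  ip_in_cidr "$ip" "$SVC_CIDR" || echo "ERROR: $ip is outside $SVC_CIDR"
done
for pair in "$NODE_CIDR $SVC_CIDR" "$NODE_CIDR $POD_CIDR" "$SVC_CIDR $POD_CIDR"; do
  read -r a b <<<"$pair"
  if cidrs_overlap "$a" "$b"; then echo "ERROR: $a overlaps $b"; fi
done
```

With the values from the network table, the script should print nothing.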

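Host Configuration

Configure passwordless SSH login

Passwordless login only makes it easier to copy files between nodes during the deployment. It is optional, so configure it if it suits you.

```shell
ssh-keygen
ssh-copy-id root@k8s-master1
ssh-copy-id root@k8s-master2
ssh-copy-id root@k8s-master3
ssh-copy-id root@k8s-worker1
# Test the login
ssh root@k8s-master1
```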

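Set the hostname

Run this on every host, replacing xxx with that host's name from the host plan:

```shell
hostnamectl set-hostname xxx
```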

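Host name resolution

```shell
cat >> /etc/hosts << EOF
192.168.10.10 ha1
192.168.10.11 ha2
10.254.16.184 k8s-master1
10.254.30.124 k8s-master2
10.254.18.125 k8s-master3
10.254.31.88 k8s-worker1
EOF
# Copy the file to the other masters and check it
for i in k8s-master2 k8s-master3;do scp /etc/hosts $i:/etc/;done
for i in k8s-master2 k8s-master3;do ssh $i 'cat /etc/hosts';done
```

Note: the addresses used here for ha1, ha2 and k8s-worker1 differ from the host plan above. Use the actual addresses of your own hosts.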

Disable SELinux

```shell
setenforce 0
sed -ri 's/SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
sestatus
for i in k8s-master1 k8s-master2 k8s-master3 k8s-worker1;do ssh $i "sed -ri 's/SELINUX=.*/SELINUX=disabled/' /etc/selinux/config";done
```

Disable swap

```shell
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
echo "vm.swappiness=0" >> /etc/sysctl.conf
sysctl -p
```

Host time synchronization

ulimit tuning

A soft limit cannot exceed its hard limit, so nofile uses 655360 for both. master1 is configured locally first, so the loop only covers the remaining nodes.

```shell
ulimit -SHn 65535
cat <<EOF >> /etc/security/limits.conf
* soft nofile 655360
* hard nofile 655360
* soft nproc 655350
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
EOF
for node in k8s-master2 k8s-master3 k8s-worker1; do
  ssh "$node" "cat <<EOF >> /etc/security/limits.conf
* soft nofile 655360
* hard nofile 655360
* soft nproc 655350
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
EOF"
done
```

Install the IPVS tools and load the modules

```shell
yum -y install ipvsadm ipset sysstat conntrack libseccomp iptables
for node in k8s-master1 k8s-master2 k8s-master3 k8s-worker1; do
  ssh "$node" "yum -y install ipvsadm ipset sysstat conntrack libseccomp"
done

modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
for node in k8s-master1 k8s-master2 k8s-master3 k8s-worker1; do
  ssh "$node" "modprobe -- ip_vs; modprobe -- ip_vs_rr; modprobe -- ip_vs_wrr; modprobe -- ip_vs_sh; modprobe -- nf_conntrack"
done

# Load the modules automatically at boot
cat >/etc/modules-load.d/ipvs.conf <<EOF
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
EOF
for node in k8s-master1 k8s-master2 k8s-master3 k8s-worker1; do
  scp /etc/modules-load.d/ipvs.conf "$node":/etc/modules-load.d/
done
```

Load the kernel modules required by containerd

```shell
modprobe overlay
modprobe br_netfilter
cat > /etc/modules-load.d/containerd.conf << EOF
overlay
br_netfilter
EOF
for node in k8s-master1 k8s-master2 k8s-master3 k8s-worker1; do
  scp /etc/modules-load.d/containerd.conf "$node":/etc/modules-load.d/
done

systemctl enable --now systemd-modules-load.service
for node in k8s-master1 k8s-master2 k8s-master3 k8s-worker1; do
  ssh "$node" "systemctl enable --now systemd-modules-load.service"
done
```

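Linux kernel upgrade

CentOS 7 has reached end of support, so this guide installs on AWS EC2 instead. The EC2 kernel used here (6.1.96) already meets the 5.17+ requirement. The kernel upgrade steps originally written for CentOS 7 may no longer work today.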

Linux kernel tuning

```shell
cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl =15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.ip_conntrack_max = 131072
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF
for node in k8s-master2 k8s-master3 k8s-worker1; do scp /etc/sysctl.d/k8s.conf $node:/etc/sysctl.d/; done
sysctl --system
for node in k8s-master1 k8s-master2 k8s-master3 k8s-worker1; do ssh $node "sysctl --system"; done
```

`fs.may_detach_mounts` and `net.ipv4.ip_conntrack_max` come from older (CentOS 7 era) kernels and may not exist on a 6.1 kernel. In that case `sysctl --system` reports an error for those two lines.
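A repeated key in a sysctl file is easy to overlook. Because `sysctl --system` applies entries in order, the last occurrence silently wins. The small awk-based helper below lists keys that appear more than once in a file such as /etc/sysctl.d/k8s.conf. The function name `sysctl_dup_keys` is made up for this sketch.

```shell
#!/usr/bin/env bash
# Sketch: list keys that occur more than once in a sysctl configuration file.
# Handles both "key = value" and "key=value"; ignores blank lines and # or ; comments.
sysctl_dup_keys() {
  awk -F'=' '
    /^[ \t]*$/    { next }               # blank line
    /^[ \t]*[#;]/ { next }               # comment line
    {
      key = $1
      gsub(/[ \t]/, "", key)             # strip spaces around the key
      if (seen[key]++ == 1) print key    # report each duplicate key once
    }
  ' "$1"
}

# Usage on a node (prints nothing if every key is unique):
# sysctl_dup_keys /etc/sysctl.d/k8s.conf
```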

Reboot and check that the modules are loaded

```shell
# Reboot every node, then log back in to master1 and verify
reboot

lsmod | grep --color=auto -e ip_vs -e nf_conntrack
for node in k8s-master1 k8s-master2 k8s-master3 k8s-worker1; do ssh $node "lsmod | grep --color=auto -e ip_vs -e nf_conntrack"; done
lsmod | grep -E 'br_netfilter |overlay'
for node in k8s-master1 k8s-master2 k8s-master3 k8s-worker1; do ssh $node "lsmod | grep -E 'br_netfilter |overlay'"; done
```
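Instead of reading `lsmod` output by eye, you can compare the modules listed in a modules-load.d file against /proc/modules. The sketch below assumes the file spells module names the way the kernel does (with underscores). Modules built into the kernel never appear in /proc/modules, so they will be reported as missing. The function name `missing_modules` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print modules named in a modules-load.d file that are not currently loaded.
# $1: modules-load.d file (one module per line)
# $2: loaded-module list, default /proc/modules (first field is the module name)
missing_modules() {
  local conf=$1 proc=${2:-/proc/modules} mod
  while read -r mod _ || [[ -n $mod ]]; do
    [[ -z $mod || $mod == \#* ]] && continue   # skip blanks and comments
    grep -q "^${mod} " "$proc" || echo "$mod"
  done < "$conf"
}

# Usage on a node:
# for f in /etc/modules-load.d/ipvs.conf /etc/modules-load.d/containerd.conf; do missing_modules "$f"; done
```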

Install other tools

```shell
yum install -y wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git lrzsz
```

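Load Balancer Preparation

⚠️ AWS VPCs do not carry VRRP traffic, so the HA pair configured here does not work on AWS as-is. To make it work there, you could use the AWS CLI to attach a network interface to whichever of the two HA nodes should hold it. The configuration below was done on virtual machines outside the public cloud, where it works.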

Install HAProxy and Keepalived

Install both on each of the two hosts:

```shell
yum -y install haproxy keepalived
```

HAProxy configuration

The HAProxy configuration is identical on both hosts. The backend server addresses below (172.31.x.x) do not match the master IPs in the host plan, so replace them with the addresses of your own masters.

```shell
cat >/etc/haproxy/haproxy.cfg<<"EOF"
global
  maxconn 2000
  ulimit-n 16384
  log 127.0.0.1 local0 err
  stats timeout 30s

defaults
  log global
  mode http
  option httplog
  timeout connect 5000
  timeout client 50000
  timeout server 50000
  timeout http-request 15s
  timeout http-keep-alive 15s

frontend monitor-in
  bind *:33305
  mode http
  option httplog
  monitor-uri /monitor

frontend k8s-master
  bind 0.0.0.0:6443
  bind 127.0.0.1:6443
  mode tcp
  option tcplog
  tcp-request inspect-delay 5s
  default_backend k8s-master

backend k8s-master
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server k8s-master1 172.31.44.194:6443 check
  server k8s-master2 172.31.39.81:6443 check
  server k8s-master3 172.31.35.224:6443 check
EOF
```
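The backend `server` lines must point at the real master addresses, so it can help to generate them from the host plan instead of typing them by hand. The minimal sketch below prints one line per `name=ip` pair; the function name `haproxy_servers` is illustrative. It assumes every apiserver listens on port 6443, as in the config above. Paste its output into the `backend k8s-master` section.

```shell
#!/usr/bin/env bash
# Sketch: print HAProxy "server" lines for the k8s-master backend.
# Each argument is a name=ip pair; port 6443 is assumed for every apiserver.
haproxy_servers() {
  local pair
  for pair in "$@"; do
    printf '    server %s %s:6443 check\n' "${pair%%=*}" "${pair#*=}"
  done
}

# Master IPs taken from the host plan
haproxy_servers k8s-master1=10.254.16.184 k8s-master2=10.254.30.124 k8s-master3=10.254.18.125
```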

Keepalived

The Keepalived configuration differs between the two hosts.

ha1 (MASTER):

```shell
cat >/etc/keepalived/keepalived.conf<<"EOF"
! Configuration File for keepalived
global_defs {
   router_id LVS_DEVEL
   script_user root
   enable_script_security
}
vrrp_script chk_apiserver {
   script "/etc/keepalived/check_apiserver.sh"
   interval 5
   weight -5
   fall 2
   rise 1
}
vrrp_instance VI_1 {
   state MASTER
   interface enX0
   mcast_src_ip 172.31.85.248
   virtual_router_id 51
   priority 100
   advert_int 2
   authentication {
      auth_type PASS
      auth_pass K8SHA_KA_AUTH
   }
   virtual_ipaddress {
      172.31.92.8
   }
   track_script {
      chk_apiserver
   }
}
EOF
```

ha2 (BACKUP):

```shell
cat >/etc/keepalived/keepalived.conf<<"EOF"
! Configuration File for keepalived
global_defs {
   router_id LVS_DEVEL
   script_user root
   enable_script_security
}
vrrp_script chk_apiserver {
   script "/etc/keepalived/check_apiserver.sh"
   interval 5
   weight -5
   fall 2
   rise 1
}
vrrp_instance VI_1 {
   state BACKUP
   interface enX0
   mcast_src_ip 172.31.92.50
   virtual_router_id 51
   priority 99
   advert_int 2
   authentication {
      auth_type PASS
      auth_pass K8SHA_KA_AUTH
   }
   virtual_ipaddress {
      172.31.92.8
   }
   track_script {
      chk_apiserver
   }
}
EOF
```

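Health check script (on both hosts)

The script uses bash syntax (`[[ ]]`) and keepalived runs it directly, so it needs a bash shebang line.

```shell
cat > /etc/keepalived/check_apiserver.sh <<"EOF"
#!/bin/bash
# Stop keepalived (releasing the VIP) if haproxy is not running after 3 checks
err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done

if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi
EOF

chmod +x /etc/keepalived/check_apiserver.sh
```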
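The health check above comes down to "retry a command a few times before declaring failure". The following is a generic version of that loop, offered only as a sketch. The `retry` function and the `RETRY_SLEEP` variable are hypothetical names and are not part of keepalived.

```shell
#!/usr/bin/env bash
# Sketch: run a command up to $1 times, sleeping RETRY_SLEEP seconds (default 1)
# between failed attempts. Returns 0 on the first success, 1 if every attempt fails.
retry() {
  local attempts=$1 i
  shift
  for (( i = 1; i <= attempts; i++ )); do
    "$@" && return 0
    (( i < attempts )) && sleep "${RETRY_SLEEP:-1}"
  done
  return 1
}

# Example (assumption): treat haproxy as healthy if a process named haproxy exists.
# retry 3 pgrep -x haproxy >/dev/null || /usr/bin/systemctl stop keepalived
```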

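Start the services and verify

```shell
systemctl daemon-reload
systemctl enable --now haproxy
systemctl enable --now keepalived
# The VIP should appear on the node that currently holds the MASTER role
ip address show
```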

Create the working directory and download the cfssl tools

Run on master1.

```shell
sudo mkdir -p /data/k8s-work
cd /data/k8s-work
sudo wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
sudo wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
sudo wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
sudo chmod +x cfssl*
sudo mv cfssl_linux-amd64 /usr/local/bin/cfssl
sudo mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
sudo mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo
cfssl version
```

Output:

```
Version: 1.2.0
Revision: dev
Runtime: go1.6
```

Notes:

- cfssl is the command-line tool for CFSSL.
- cfssljson takes the JSON output of cfssl and writes the certificates, keys, CSRs and bundles to files.

Create the CA certificate

Configure the CA certificate signing request file:

```shell
sudo tee ca-csr.json <<"EOF"
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ],
  "ca": {
    "expiry": "87600h"
  }
}
EOF
```

Generate the CA certificate:

```shell
sudo cfssl gencert -initca ca-csr.json | sudo cfssljson -bare ca
```

Configure the CA signing policy (87600h is 10 years):

```shell
sudo tee ca-config.json <<"EOF"
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF
```

Create the etcd certificate

Run on master1.

Configure the etcd CSR file:

```shell
sudo tee etcd-csr.json <<"EOF"
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "10.254.16.184",
    "10.254.30.124",
    "10.254.18.125"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "Beijing",
    "L": "Beijing",
    "O": "kubemsb",
    "OU": "CN"
  }]
}
EOF
```

Note: hosts lists the IP addresses of the three nodes that run etcd.

Generate the etcd certificate:

```shell
sudo cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | sudo cfssljson -bare etcd
```

The working directory should now contain:

```
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem  etcd.csr  etcd-csr.json  etcd-key.pem  etcd.pem
```

Deploy the etcd Cluster

Perform the steps on one node first, then copy the result to the other etcd nodes.

Download the etcd package:

```shell
sudo wget https://github.com/etcd-io/etcd/releases/download/v3.5.2/etcd-v3.5.2-linux-amd64.tar.gz
```

Install etcd:

```shell
sudo tar -xvf etcd-v3.5.2-linux-amd64.tar.gz
sudo cp -p etcd-v3.5.2-linux-amd64/etcd* /usr/local/bin/
sudo rm -rf etcd-v*
```

Distribute the etcd binaries:

```shell
for i in k8s-master2 k8s-master3;do scp /usr/local/bin/etcd* $i:/usr/local/bin/;done
```

Create the etcd systemd unit file

```shell
sudo mkdir -p /var/lib/etcd
chmod 700 /var/lib/etcd
# Unquoted EOF: each \\ below is written to the file as a single \ (line continuation)
cat > /etc/systemd/system/etcd.service <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \\
  --name=etcd1 \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --initial-advertise-peer-urls=https://10.254.16.184:2380 \\
  --listen-peer-urls=https://10.254.16.184:2380 \\
  --listen-client-urls=https://10.254.16.184:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls=https://10.254.16.184:2379 \\
  --initial-cluster-token=etcd-cluster-0 \\
  --initial-cluster=etcd1=https://10.254.16.184:2380,etcd2=https://10.254.30.124:2380,etcd3=https://10.254.18.125:2380 \\
  --initial-cluster-state=new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

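Notes:

- Both the etcd working directory and the data directory are /var/lib/etcd. Create this directory before starting the service.
- For secure communication, specify etcd's own certificate and key (cert-file, key-file), the certificate, key and CA used for peer communication (peer-cert-file, peer-key-file, peer-trusted-ca-file), and the CA for client certificates (trusted-ca-file).
- When --initial-cluster-state is new, the value of --name must appear in the --initial-cluster list.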
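The unit file above hard-codes master1's name and IPs. Each etcd member therefore needs its own values for `--name` and the four URL flags, while `--initial-cluster` stays the same everywhere. The sketch below prints those per-node flags. It assumes members are named etcd1..etcd3 in the same order as the host plan. `ETCD_IPS`, `etcd_initial_cluster` and `etcd_node_flags` are illustrative names.

```shell
#!/usr/bin/env bash
# Sketch: print the node-specific etcd flags for one member.
# Assumption: member N is named etcdN and uses the N-th IP below (host plan order).
ETCD_IPS=(10.254.16.184 10.254.30.124 10.254.18.125)

# Comma-joined --initial-cluster value, identical on every member.
etcd_initial_cluster() {
  local i out=()
  for i in "${!ETCD_IPS[@]}"; do
    out+=("etcd$((i + 1))=https://${ETCD_IPS[i]}:2380")
  done
  (IFS=,; echo "${out[*]}")
}

# $1: 1-based member index
etcd_node_flags() {
  local n=$1 ip=${ETCD_IPS[$1 - 1]}
  cat <<EOF
--name=etcd${n}
--initial-advertise-peer-urls=https://${ip}:2380
--listen-peer-urls=https://${ip}:2380
--listen-client-urls=https://${ip}:2379,http://127.0.0.1:2379
--advertise-client-urls=https://${ip}:2379
--initial-cluster=$(etcd_initial_cluster)
EOF
}

# Flags for k8s-master2
etcd_node_flags 2
```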

Copy the etcd and CA certificates into place:

```shell
mkdir -p /etc/etcd/ssl/
mkdir -p /etc/kubernetes/ssl/
cp etcd*.pem /etc/etcd/ssl/
cp ca*.pem /etc/kubernetes/ssl/
```

Start the etcd service:

```shell
sudo systemctl daemon-reload
sudo systemctl enable etcd --now
sudo systemctl status etcd
```

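The first etcd process to start will appear to hang for a while as it waits for the other members to join. Repeat the steps above on every etcd node until etcd is running on all of them.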

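Copy the etcd configuration to the other nodes

```shell
for i in k8s-master2 k8s-master3;do ssh $i 'mkdir -p /etc/etcd/ssl/; mkdir -p /etc/kubernetes/ssl/; mkdir -p /var/lib/etcd/; chmod 700 /var/lib/etcd';done
for i in k8s-master2 k8s-master3;do
  scp -r /etc/etcd/ssl/* $i:/etc/etcd/ssl/
  scp -r /etc/kubernetes/ssl/* $i:/etc/kubernetes/ssl/
  scp /etc/systemd/system/etcd.service $i:/etc/systemd/system/etcd.service
done
```

The copied etcd.service still contains master1's values. On each node, set --name to that node's member name (etcd2 on k8s-master2, etcd3 on k8s-master3). Also replace 10.254.16.184 with the node's own IP in --initial-advertise-peer-urls, --listen-peer-urls, --listen-client-urls and --advertise-client-urls. Leave --initial-cluster unchanged.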

Start etcd on the other two nodes, then restart it on all three:

```shell
for i in k8s-master2 k8s-master3;do ssh $i 'systemctl daemon-reload; systemctl enable etcd --now';done
for i in k8s-master1 k8s-master2 k8s-master3;do ssh $i 'systemctl restart etcd';done
```

Verify the etcd service

```shell
for ip in 10.254.16.184 10.254.30.124 10.254.18.125; do
  ETCDCTL_API=3 /usr/local/bin/etcdctl \
    --endpoints=https://${ip}:2379 \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/etcd/ssl/etcd.pem \
    --key=/etc/etcd/ssl/etcd-key.pem \
    endpoint health
done
```

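The output looks like the sample below. The IP addresses in the sample output, and in the --endpoints of the commands that follow it, do not match the host plan; substitute your own etcd node IPs.

```
https://172.31.44.194:2379 is healthy: successfully committed proposal: took = 16.295348ms
https://172.31.37.159:2379 is healthy: successfully committed proposal: took = 11.500724ms
https://172.31.35.224:2379 is healthy: successfully committed proposal: took = 10.682674ms
```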

```shell
# Endpoint health as a table
ETCDCTL_API=3 /usr/local/bin/etcdctl \
  --endpoints=https://10.254.25.93:2379,https://10.254.24.60:2379,https://10.254.23.214:2379 \
  --cacert=/etc/kubernetes/ssl/ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem \
  --write-out=table \
  endpoint health
```

```
+----------------------------+--------+-------------+-------+
|          ENDPOINT          | HEALTH |    TOOK     | ERROR |
+----------------------------+--------+-------------+-------+
| https://172.31.44.194:2379 |   true | 13.342283ms |       |
| https://172.31.37.159:2379 |   true | 13.832637ms |       |
| https://172.31.35.224:2379 |   true |  13.85639ms |       |
+----------------------------+--------+-------------+-------+
```

```shell
# Performance check
ETCDCTL_API=3 /usr/local/bin/etcdctl \
  --endpoints=https://10.254.25.93:2379,https://10.254.24.60:2379,https://10.254.23.214:2379 \
  --cacert=/etc/kubernetes/ssl/ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem \
  --write-out=table \
  check perf

# Member list
ETCDCTL_API=3 /usr/local/bin/etcdctl \
  --endpoints=https://10.254.25.93:2379,https://10.254.24.60:2379,https://10.254.23.214:2379 \
  --cacert=/etc/kubernetes/ssl/ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem \
  --write-out=table \
  member list

# Endpoint status (shows which member is the leader)
ETCDCTL_API=3 /usr/local/bin/etcdctl \
  --endpoints=https://10.254.25.93:2379,https://10.254.24.60:2379,https://10.254.23.214:2379 \
  --cacert=/etc/kubernetes/ssl/ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem \
  --write-out=table \
  endpoint status
```
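Every etcdctl call repeats the same TLS flags. One way to avoid that is to build the endpoint list once and wrap etcdctl in a shell function. The sketch below does this using the etcd IPs from the host plan and the certificate paths used throughout this guide. The helper names `etcd_endpoints` and `etcdctl_tls` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build a comma-separated --endpoints value from etcd node IPs.
etcd_endpoints() {
  local ip out=()
  for ip in "$@"; do out+=("https://${ip}:2379"); done
  (IFS=,; echo "${out[*]}")
}

# Wrapper that adds endpoints and TLS flags; extra arguments go to etcdctl.
etcdctl_tls() {
  ETCDCTL_API=3 /usr/local/bin/etcdctl \
    --endpoints="$(etcd_endpoints 10.254.16.184 10.254.30.124 10.254.18.125)" \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/etcd/ssl/etcd.pem \
    --key=/etc/etcd/ssl/etcd-key.pem \
    --write-out=table "$@"
}

# Usage on an etcd node:
# etcdctl_tls endpoint health
# etcdctl_tls member list
```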

Kubernetes Cluster Deployment

Download the Kubernetes server package

```shell
wget https://dl.k8s.io/v1.21.10/kubernetes-server-linux-amd64.tar.gz
```

Install the Kubernetes binaries

```shell
tar -xvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin/
cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/
```

Distribute the Kubernetes binaries

```shell
# Control-plane binaries to the other masters
for i in k8s-master2 k8s-master3;do scp kube-apiserver kube-controller-manager kube-scheduler kubectl $i:/usr/local/bin/;done

# Node binaries to every node
cp kubelet kube-proxy /usr/local/bin
for i in k8s-master2 k8s-master3 k8s-worker1;do scp kubelet kube-proxy $i:/usr/local/bin/;done
```

Create directories on the cluster nodes

```shell
mkdir -p /etc/kubernetes/ssl
mkdir -p /var/log/kubernetes
for i in k8s-master2 k8s-master3 k8s-worker1;do ssh $i 'mkdir -p /etc/kubernetes/ssl; mkdir -p /var/log/kubernetes'; done
```

Deploy kube-apiserver

Run these steps on the master nodes.

Create the apiserver certificate signing request file

```shell
cd /data/k8s-work/
cat > kube-apiserver-csr.json << "EOF"
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.254.16.184",
    "10.254.30.124",
    "10.254.18.125",
    "10.96.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ]
}
EOF
```

Generate the apiserver certificate and the token file, and copy them into place on master1

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

cat > token.csv << EOF
$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF
cp token.csv /etc/kubernetes/token.csv
cp kube-apiserver*.pem /etc/kubernetes/ssl/
```
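Each line of the static token file has the form `token,user,uid,"group"`. The sketch below wraps the generation command from above in a function and adds a format check. Both function names are made up for illustration. The 16 random bytes, printed as four 32-bit hex words, produce a 32-character token.

```shell
#!/usr/bin/env bash
# Sketch: generate a token.csv line as above and validate its format.
gen_token_line() {
  local token
  # 16 random bytes -> 4 hex words of 8 digits; drop spaces and newline
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

valid_token_line() {
  local re='^[0-9a-f]{32},kubelet-bootstrap,10001,"system:kubelet-bootstrap"$'
  [[ $1 =~ $re ]]
}

# Usage: check an existing file
# valid_token_line "$(head -n 1 /etc/kubernetes/token.csv)" && echo "token.csv looks fine"
```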

Create the apiserver configuration file

```shell
cat > /etc/kubernetes/kube-apiserver.conf << "EOF"
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --anonymous-auth=false \
  --bind-address=10.254.16.184 \
  --secure-port=6443 \
  --advertise-address=10.254.16.184 \
  --insecure-port=0 \
  --authorization-mode=Node,RBAC \
  --runtime-config=api/all=true \
  --enable-bootstrap-token-auth \
  --service-cluster-ip-range=10.96.0.0/16 \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-node-port-range=30000-32767 \
  --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
  --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-issuer=api \
  --etcd-cafile=/etc/kubernetes/ssl/ca.pem \
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
  --etcd-servers=https://10.254.16.184:2379,https://10.254.30.124:2379,https://10.254.18.125:2379 \
  --enable-swagger-ui=true \
  --allow-privileged=true \
  --apiserver-count=3 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/log/kube-apiserver-audit.log \
  --event-ttl=1h \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=4"
EOF
```

Create the kube-apiserver systemd unit file

```shell
cat > /etc/systemd/system/kube-apiserver.service << "EOF"
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=etcd.service
Wants=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf
ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

Sync the files to all master nodes

```shell
for i in k8s-master3 k8s-master2;do
  scp /etc/kubernetes/token.csv $i:/etc/kubernetes
  scp /etc/kubernetes/kube-apiserver.conf $i:/etc/kubernetes
  scp /etc/kubernetes/ssl/kube-apiserver*.pem $i:/etc/kubernetes/ssl/
  scp /etc/systemd/system/kube-apiserver.service $i:/etc/systemd/system/kube-apiserver.service
done
```

After copying, set --bind-address and --advertise-address in /etc/kubernetes/kube-apiserver.conf on each master to that master's own IP.
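One way to produce a correct copy for another master is to rewrite only the `--bind-address` and `--advertise-address` values with sed and leave the `--etcd-servers` list untouched. The sketch below does this with a hypothetical function named `render_apiserver_conf`. The same approach works for any other file that hard-codes master1's IP.

```shell
#!/usr/bin/env bash
# Sketch: print kube-apiserver.conf with the bind/advertise addresses replaced.
# $1: source conf file, $2: IP of the target master
render_apiserver_conf() {
  local src=$1 new_ip=$2
  sed -e "s|--bind-address=[0-9.]*|--bind-address=${new_ip}|" \
      -e "s|--advertise-address=[0-9.]*|--advertise-address=${new_ip}|" "$src"
}

# Usage (adjust host names and IPs to your plan):
# render_apiserver_conf /etc/kubernetes/kube-apiserver.conf 10.254.30.124 \
#   | ssh k8s-master2 'cat > /etc/kubernetes/kube-apiserver.conf'
```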

Start kube-apiserver

```shell
for i in k8s-master1 k8s-master3 k8s-master2;do ssh $i 'systemctl daemon-reload; systemctl enable --now kube-apiserver';done
```

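Check that kube-apiserver is running

```shell
for i in k8s-master1 k8s-master3 k8s-master2;do ssh $i 'systemctl is-active kube-apiserver';done
for i in k8s-master1 k8s-master3 k8s-master2;do curl --insecure https://$i:6443/;done
```

Anonymous access is disabled (--anonymous-auth=false), so a 401 Unauthorized response from curl means the apiserver is up.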

Deploy kubectl

Create the kubectl (admin) certificate signing request file:

```shell
cat > admin-csr.json << "EOF"
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:masters",
      "OU": "system"
    }
  ]
}
EOF
```

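Notes:

- kube-apiserver uses RBAC to authorize requests from clients such as kubelet, kube-proxy and Pods.
- kube-apiserver predefines some RBAC bindings. For example, the ClusterRoleBinding cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call every kube-apiserver API.
- O sets the certificate's Group to system:masters. When a client such as kubectl uses this certificate, authentication succeeds because the certificate is signed by the CA. The certificate's group, system:masters, is pre-authorized, so the client is granted access to all APIs.
- This admin certificate is used later to generate the administrator's kubeconfig. RBAC is the generally recommended way to control roles and permissions in Kubernetes. Kubernetes takes the certificate's CN field as the User and the O field as the Group.
- The value "O": "system:masters" must be exactly system:masters; otherwise the later kubectl create clusterrolebinding command fails.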
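To see which User and Group kube-apiserver will derive from a certificate, print its subject in RFC 2253 form, for example with `openssl x509 -in admin.pem -noout -subject -nameopt RFC2253`, and pick out the CN and O attributes. The parser below is a sketch that assumes no attribute value contains an escaped comma. The function `k8s_identity` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: map a certificate subject (RFC 2253 form) to the Kubernetes identity.
# CN becomes the User; every O becomes a Group.
k8s_identity() {
  local subject=${1#subject=} rdn user="" groups=()
  local IFS=,
  for rdn in $subject; do
    case $rdn in
      CN=*) user=${rdn#CN=} ;;
      O=*)  groups+=("${rdn#O=}") ;;
    esac
  done
  IFS=' '
  echo "user=${user} groups=${groups[*]}"
}

# Usage:
# k8s_identity "$(openssl x509 -in admin.pem -noout -subject -nameopt RFC2253)"
```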

Generate the certificate files:

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
```

Copy the files into place:

```shell
cp admin*.pem /etc/kubernetes/ssl/
for i in k8s-master2 k8s-master3;do scp admin*.pem $i:/etc/kubernetes/ssl/;done
```

Generate the kubeconfig file

kube.config is kubectl's configuration file. It contains everything needed to reach the apiserver: the apiserver address, the CA certificate, and the client's own certificate.

```shell
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.254.16.184:6443 --kubeconfig=kube.config
kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config
kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config
kubectl config use-context kubernetes --kubeconfig=kube.config
```
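The same four `kubectl config` calls are repeated later for each certificate-based component kubeconfig. The sketch below wraps them in a function. The function `make_kubeconfig` and the `KUBECTL` override are hypothetical. The function assumes ca.pem is in the current directory, and it names the context after the user, whereas the admin kubeconfig above uses the context name `kubernetes`.

```shell
#!/usr/bin/env bash
# Sketch: create a certificate-based kubeconfig in four kubectl config calls.
# $1 output file, $2 user name, $3 client cert, $4 client key, $5 apiserver URL
# Set KUBECTL=echo to preview the commands instead of running kubectl.
make_kubeconfig() {
  local out=$1 user=$2 cert=$3 key=$4 server=$5 k=${KUBECTL:-kubectl}
  "$k" config set-cluster kubernetes --certificate-authority=ca.pem \
    --embed-certs=true --server="$server" --kubeconfig="$out"
  "$k" config set-credentials "$user" --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$out"
  "$k" config set-context "$user" --cluster=kubernetes --user="$user" --kubeconfig="$out"
  "$k" config use-context "$user" --kubeconfig="$out"
}

# Example:
# make_kubeconfig kube-scheduler.kubeconfig system:kube-scheduler \
#   kube-scheduler.pem kube-scheduler-key.pem https://10.254.16.184:6443
```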

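Install the kubectl configuration and create the role binding

The apiserver authenticates to kubelets with kube-apiserver.pem, whose CN is kubernetes. The binding below therefore grants the user kubernetes access to the kubelet API.

```shell
mkdir ~/.kube
cp kube.config ~/.kube/config
kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes --kubeconfig=/root/.kube/config
```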

Check the cluster status

```shell
export KUBECONFIG=$HOME/.kube/config
kubectl cluster-info
kubectl get componentstatuses
kubectl get all --all-namespaces
```

Copy the kubectl configuration to the other master nodes

```shell
for i in k8s-master2 k8s-master3;do ssh $i 'mkdir /root/.kube';done
for i in k8s-master2 k8s-master3;do scp /root/.kube/config $i:/root/.kube/;done
```

Configure kubectl command completion

```shell
yum install -y bash-completion
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
kubectl completion bash > ~/.kube/completion.bash.inc
source '/root/.kube/completion.bash.inc'
source $HOME/.bash_profile
```

Deploy kube-controller-manager

Create the kube-controller-manager certificate signing request file:

```shell
cat > kube-controller-manager-csr.json << "EOF"
{
  "CN": "system:kube-controller-manager",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "hosts": [
    "127.0.0.1",
    "10.254.16.184",
    "10.254.30.124",
    "10.254.18.125"
  ],
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-controller-manager",
      "OU": "system"
    }
  ]
}
EOF
```

Notes:

- hosts lists the IPs of all kube-controller-manager nodes.
- CN is system:kube-controller-manager.
- O is system:kube-controller-manager. The built-in ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs.

Generate the kube-controller-manager certificate:

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
```

Create kube-controller-manager.kubeconfig:

```shell
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.254.16.184:6443 --kubeconfig=kube-controller-manager.kubeconfig
kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig
kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
```

Create the kube-controller-manager configuration file:

```shell
cat > kube-controller-manager.conf << "EOF"
KUBE_CONTROLLER_MANAGER_OPTS="--port=10252 \
  --secure-port=10257 \
  --bind-address=127.0.0.1 \
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --service-cluster-ip-range=10.96.0.0/16 \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --allocate-node-cidrs=true \
  --cluster-cidr=10.244.0.0/16 \
  --experimental-cluster-signing-duration=87600h \
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --leader-elect=true \
  --feature-gates=RotateKubeletServerCertificate=true \
  --controllers=*,bootstrapsigner,tokencleaner \
  --horizontal-pod-autoscaler-use-rest-clients=true \
  --horizontal-pod-autoscaler-sync-period=10s \
  --tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \
  --use-service-account-credentials=true \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2"
EOF
```

Create the systemd unit file:

```shell
cat > kube-controller-manager.service << "EOF"
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/kube-controller-manager.conf
ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

Copy the files to the master nodes:

```shell
cp kube-controller-manager*.pem /etc/kubernetes/ssl/
cp kube-controller-manager.kubeconfig /etc/kubernetes/
cp kube-controller-manager.conf /etc/kubernetes/
cp kube-controller-manager.service /usr/lib/systemd/system/

for i in k8s-master2 k8s-master3;do
  scp kube-controller-manager*.pem $i:/etc/kubernetes/ssl/
  scp kube-controller-manager.kubeconfig $i:/etc/kubernetes/
  scp kube-controller-manager.conf $i:/etc/kubernetes/
  scp kube-controller-manager.service $i:/usr/lib/systemd/system/
done

# Optional: inspect the certificate
openssl x509 -in /etc/kubernetes/ssl/kube-controller-manager.pem -noout -text
```

Start the service:

```shell
systemctl daemon-reload
systemctl enable --now kube-controller-manager
systemctl status kube-controller-manager

for i in k8s-master2 k8s-master3;do ssh $i 'systemctl daemon-reload; systemctl enable --now kube-controller-manager; systemctl is-active kube-controller-manager';done
```

Deploy kube-scheduler

Create the kube-scheduler certificate signing request file:

```shell
cat > kube-scheduler-csr.json << "EOF"
{
  "CN": "system:kube-scheduler",
  "hosts": [
    "127.0.0.1",
    "10.254.16.184",
    "10.254.30.124",
    "10.254.18.125"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-scheduler",
      "OU": "system"
    }
  ]
}
EOF
```

Generate the kube-scheduler certificate:

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
```

Create the kube-scheduler kubeconfig:

```shell
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.254.16.184:6443 --kubeconfig=kube-scheduler.kubeconfig
kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig
kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
```

Create the service configuration file:

```shell
cat > kube-scheduler.conf << "EOF"
KUBE_SCHEDULER_OPTS="--address=127.0.0.1 \
  --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
  --leader-elect=true \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2"
EOF
```

Create the systemd unit file:

```shell
cat > kube-scheduler.service << "EOF"
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf
ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

Copy the files to the master nodes:

```shell
cp kube-scheduler*.pem /etc/kubernetes/ssl/
cp kube-scheduler.kubeconfig /etc/kubernetes/
cp kube-scheduler.conf /etc/kubernetes/
cp kube-scheduler.service /usr/lib/systemd/system/

for i in k8s-master2 k8s-master3;do
  scp kube-scheduler*.pem $i:/etc/kubernetes/ssl/
  scp kube-scheduler.kubeconfig kube-scheduler.conf $i:/etc/kubernetes/
  scp kube-scheduler.service $i:/usr/lib/systemd/system/
done
```

Start the service:

```shell
systemctl daemon-reload
systemctl enable --now kube-scheduler
systemctl is-active kube-scheduler

for i in k8s-master2 k8s-master3;do ssh $i 'systemctl daemon-reload; systemctl enable --now kube-scheduler; systemctl is-active kube-scheduler';done
```

Worker Node Deployment

Download the package and unpack it into /:

```shell
wget https://github.com/containerd/containerd/releases/download/v1.6.1/cri-containerd-cni-1.6.1-linux-amd64.tar.gz
tar -xf cri-containerd-cni-1.6.1-linux-amd64.tar.gz -C /
```

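Generate the default configuration file and modify it:

```shell
mkdir /etc/containerd
containerd config default > /etc/containerd/config.toml
sed -i 's@systemd_cgroup = false@systemd_cgroup = true@' /etc/containerd/config.toml
sed -i 's@k8s.gcr.io/pause:3.6@registry.aliyuncs.com/google_containers/pause:3.6@' /etc/containerd/config.toml
```

Alternatively, write the complete configuration file directly. This overwrites the file generated above: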

```shell
cat >/etc/containerd/config.toml<<EOF
root = "/var/lib/containerd"
state = "/run/containerd"
oom_score = -999

[grpc]
  address = "/run/containerd/containerd.sock"
  uid = 0
  gid = 0
  max_recv_message_size = 16777216
  max_send_message_size = 16777216

[debug]
  address = ""
  uid = 0
  gid = 0
  level = ""

[metrics]
  address = ""
  grpc_histogram = false

[cgroup]
  path = ""

[plugins]
  [plugins.cgroups]
    no_prometheus = false
  [plugins.cri]
    stream_server_address = "127.0.0.1"
    stream_server_port = "0"
    enable_selinux = false
    sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"
    stats_collect_period = 10
    systemd_cgroup = true
    enable_tls_streaming = false
    max_container_log_line_size = 16384
    [plugins.cri.containerd]
      snapshotter = "overlayfs"
      no_pivot = false
      [plugins.cri.containerd.default_runtime]
        runtime_type = "io.containerd.runtime.v1.linux"
        runtime_engine = ""
        runtime_root = ""
      [plugins.cri.containerd.untrusted_workload_runtime]
        runtime_type = ""
        runtime_engine = ""
        runtime_root = ""
    [plugins.cri.cni]
      bin_dir = "/opt/cni/bin"
      conf_dir = "/etc/cni/net.d"
      conf_template = "/etc/cni/net.d/10-default.conf"
    [plugins.cri.registry]
      [plugins.cri.registry.mirrors]
        [plugins.cri.registry.mirrors."docker.io"]
          endpoint = [
            "https://docker.mirrors.ustc.edu.cn",
            "http://hub-mirror.c.163.com"
          ]
        [plugins.cri.registry.mirrors."gcr.io"]
          endpoint = [
            "https://gcr.mirrors.ustc.edu.cn"
          ]
        [plugins.cri.registry.mirrors."k8s.gcr.io"]
          endpoint = [
            "https://gcr.mirrors.ustc.edu.cn/google-containers/"
          ]
        [plugins.cri.registry.mirrors."quay.io"]
          endpoint = [
            "https://quay.mirrors.ustc.edu.cn"
          ]
        [plugins.cri.registry.mirrors."harbor.kubemsb.com"]
          endpoint = [
            "http://harbor.kubemsb.com"
          ]
    [plugins.cri.x509_key_pair_streaming]
      tls_cert_file = ""
      tls_key_file = ""
  [plugins.diff-service]
    default = ["walking"]
  [plugins.linux]
    shim = "containerd-shim"
    runtime = "runc"
    runtime_root = ""
    no_shim = false
    shim_debug = false
  [plugins.opt]
    path = "/opt/containerd"
  [plugins.restart]
    interval = "10s"
  [plugins.scheduler]
    pause_threshold = 0.02
    deletion_threshold = 0
    mutation_threshold = 100
    schedule_delay = "0s"
    startup_delay = "100ms"
EOF
```

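Install runc

The runc bundled in the package above depends on too many system libraries, so installing it separately is recommended. When run, the bundled runc fails with:

runc: symbol lookup error: runc: undefined symbol: seccomp_notify_respond

```shell
wget https://github.com/opencontainers/runc/releases/download/v1.1.0/runc.amd64
chmod +x runc.amd64
mv runc.amd64 /usr/local/sbin/runc
runc --version
```

Output:

```
runc version 1.1.0
commit: v1.1.0-0-g067aaf85
spec: 1.0.2-dev
go: go1.17.6
libseccomp: 2.5.3
```

Then enable and start containerd:

```shell
systemctl enable containerd
systemctl start containerd
```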

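Note: repeat the worker node steps above on every other node that runs workloads. In this plan every master is also a worker, so that means all four nodes.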

Deploy kubelet

Run on k8s-master1.

Create kubelet-bootstrap.kubeconfig:

```shell
BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.254.16.184:6443 --kubeconfig=kubelet-bootstrap.kubeconfig
kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig
kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig
```

Create the role bindings for the bootstrap user:

```shell
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap
kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig
```

Check the bindings:

```shell
kubectl describe clusterrolebinding cluster-system-anonymous
kubectl describe clusterrolebinding kubelet-bootstrap
```

Create the kubelet configuration file

cat > kubelet.json << "EOF"
{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/etc/kubernetes/ssl/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "10.254.16.184",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "systemd",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.96.0.2"]
}
EOF

Create the kubelet systemd unit file

cat > kubelet.service << "EOF"
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --cert-dir=/etc/kubernetes/ssl \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.json \
  --cni-bin-dir=/opt/cni/bin \
  --cni-conf-dir=/etc/cni/net.d \
  --container-runtime=remote \
  --container-runtime-endpoint=unix:///run/containerd/containerd.sock \
  --network-plugin=cni \
  --rotate-certificates \
  --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.6 \
  --root-dir=/var/lib/kubelet \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

Note: --root-dir is the kubelet's own state directory (default /var/lib/kubelet) and must not point at the CNI configuration directory /etc/cni/net.d. With containerd as the runtime, the pause image actually used for Pod sandboxes is sandbox_image in /etc/containerd/config.toml, so the tag here is kept the same (pause:3.6).

Sync the files to the cluster nodes

cp kubelet-bootstrap.kubeconfig /etc/kubernetes/
cp kubelet.json /etc/kubernetes/
cp kubelet.service /usr/lib/systemd/system/

for i in k8s-master2 k8s-master3 k8s-worker1;do scp kubelet-bootstrap.kubeconfig kubelet.json $i:/etc/kubernetes/;done
for i in k8s-master2 k8s-master3 k8s-worker1;do scp ca.pem $i:/etc/kubernetes/ssl/;done
for i in k8s-master2 k8s-master3 k8s-worker1;do scp kubelet.service $i:/usr/lib/systemd/system/;done

Note: kubelet.json sets "address" to k8s-master1's IP (10.254.16.184). On every other node, change it to that node's own IP address before starting kubelet.
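Because kubelet.json is written once on k8s-master1, its `"address"` field has to be rewritten for each node. A minimal sketch of a hypothetical helper, `render_kubelet_json`, that uses sed to substitute the IP into a per-node copy before it is copied. The node IP in the usage comment comes from the host plan table, and the per-node file name is made up for the example:

```shell
#!/usr/bin/env bash
# Sketch: render a per-node copy of kubelet.json with that node's IP in "address".
# Assumes the source file contains a line like: "address": "10.254.16.184",

render_kubelet_json() {
  # $1: source kubelet.json, $2: node IP, $3: output file
  # Keeps the key and quotes (\1, \2) and replaces only the dotted IP between them.
  sed -E 's/("address"[[:space:]]*:[[:space:]]*")[0-9.]+(")/\1'"$2"'\2/' "$1" > "$3"
}

# Example usage (not executed here):
#   render_kubelet_json kubelet.json 10.254.30.124 kubelet-k8s-master2.json
#   scp kubelet-k8s-master2.json k8s-master2:/etc/kubernetes/kubelet.json
```

Rendering on k8s-master1 keeps a single source file and avoids editing JSON by hand on each host.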

Create the directories and start the service

mkdir -p /var/lib/kubelet
mkdir -p /var/log/kubernetes
for i in k8s-master2 k8s-master3 k8s-worker1;do ssh $i 'mkdir -p /var/lib/kubelet; mkdir -p /var/log/kubernetes';done

systemctl daemon-reload
systemctl enable --now kubelet
systemctl is-active kubelet
for i in k8s-master2 k8s-master3 k8s-worker1;do ssh $i 'systemctl daemon-reload; systemctl enable --now kubelet; systemctl is-active kubelet';done

[root@ip-10-254-16-184 k8s-work]# kubectl get nodes
NAME                                          STATUS   ROLES    AGE    VERSION
ip-10-254-16-184.ap-east-1.compute.internal   Ready    <none>   3m2s   v1.21.10
[root@ip-10-254-16-184 k8s-work]# kubectl get csr
NAME        AGE     SIGNERNAME                                    REQUESTOR           CONDITION
csr-b2z2g   3m34s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
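Here the kubelet's bootstrap CSR was approved automatically. If a CSR stays `Pending` instead, it can be approved by hand with `kubectl certificate approve <name>`. The following sketch shows a hypothetical filter, `pending_csrs`, that extracts the names of pending requests from `kubectl get csr` output. It assumes that CONDITION is the last column, as in the output above:

```shell
#!/usr/bin/env bash
# Sketch: print the names of CSRs whose CONDITION column is "Pending".
# Reads `kubectl get csr` style output on stdin; skips the header line.

pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" {print $1}'
}

# Example usage on the cluster (not executed here):
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```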

Deploy kube-proxy

Create the kube-proxy certificate signing request file

cat > kube-proxy-csr.json << "EOF"
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

Create the kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.254.16.184:6443 --kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Create the service configuration file

cat > kube-proxy.yaml << "EOF"
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 10.254.16.184
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: 10.254.16.184:10256
kind: KubeProxyConfiguration
metricsBindAddress: 10.254.16.184:10249
mode: "ipvs"
EOF
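Only the three address fields (`bindAddress`, `healthzBindAddress`, `metricsBindAddress`) differ between nodes, and `clusterCIDR` stays the Pod network 10.244.0.0/16. A minimal sketch of a hypothetical generator, `gen_kube_proxy_yaml`, that prints the same configuration for any node IP, so each node can get its own file. The usage lines and file names are illustrative assumptions:

```shell
#!/usr/bin/env bash
# Sketch: print kube-proxy.yaml for one node; the fields match the file above,
# with the node IP substituted into the three address fields.

gen_kube_proxy_yaml() {
  # $1: node IP
  cat << EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: $1
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: $1:10256
kind: KubeProxyConfiguration
metricsBindAddress: $1:10249
mode: "ipvs"
EOF
}

# Example usage (not executed here):
#   gen_kube_proxy_yaml 10.254.30.124 > kube-proxy-k8s-master2.yaml
#   scp kube-proxy-k8s-master2.yaml k8s-master2:/etc/kubernetes/kube-proxy.yaml
```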

Create the systemd unit file

cat > kube-proxy.service << "EOF"
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.yaml \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Sync the files to the worker hosts

cp kube-proxy*.pem /etc/kubernetes/ssl/
cp kube-proxy.kubeconfig kube-proxy.yaml /etc/kubernetes/
cp kube-proxy.service /usr/lib/systemd/system/
for i in k8s-master2 k8s-master3 k8s-worker1;do scp kube-proxy.kubeconfig kube-proxy.yaml $i:/etc/kubernetes/;done
for i in k8s-master2 k8s-master3 k8s-worker1;do scp kube-proxy.service $i:/usr/lib/systemd/system/;done

Note: on every other node, change bindAddress, healthzBindAddress and metricsBindAddress in /etc/kubernetes/kube-proxy.yaml to that node's own IP address.

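Start the service

mkdir -p /var/lib/kube-proxy
systemctl daemon-reload
systemctl enable --now kube-proxy
systemctl status kube-proxy

Do the same on the other nodes:

for i in k8s-master2 k8s-master3 k8s-worker1;do ssh $i 'mkdir -p /var/lib/kube-proxy; systemctl daemon-reload; systemctl enable --now kube-proxy; systemctl is-active kube-proxy';done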

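Deploy the network component: Calico

wget https://docs.projectcalico.org/v3.19/manifests/calico.yaml

In calico.yaml (around lines 3683-3684 of this manifest version), enable CALICO_IPV4POOL_CIDR, which is commented out by default, and set it to the Pod network:

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"

kubectl apply -f calico.yaml
kubectl get pods -A

The following extra steps were also run on this host (Amazon Linux 2023): install iptables and mount a named systemd cgroup hierarchy.

yum install iptables
sudo mkdir /sys/fs/cgroup/systemd
sudo mount -t cgroup -o none,name=systemd cgroup /sys/fs/cgroup/systemd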
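Instead of editing calico.yaml by hand, you can make the CIDR change with sed. The following is a minimal sketch that assumes the manifest contains the default entry commented out exactly as `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"`. Check your copy first, because the text may differ between Calico releases. The helper name `set_calico_pool_cidr` is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set it to the Pod CIDR.
# Assumes the manifest ships these two commented lines (indentation preserved):
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"

set_calico_pool_cidr() {
  # $1: manifest path, $2: Pod CIDR (e.g. 10.244.0.0/16); edits the file in place (GNU sed)
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e 's|#   value: "192.168.0.0/16"|  value: "'"$2"'"|' \
    "$1"
}

# Example usage (not executed here):
#   set_calico_pool_cidr calico.yaml 10.244.0.0/16
#   grep -A1 'CALICO_IPV4POOL_CIDR' calico.yaml
```

Replacing `# ` with nothing and `#   ` with two spaces keeps `value:` aligned under `name:`, so the YAML list item stays valid.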

Deploy CoreDNS

cat > coredns.yaml << "EOF"
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.8.4
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
EOF

kubectl apply -f coredns.yaml

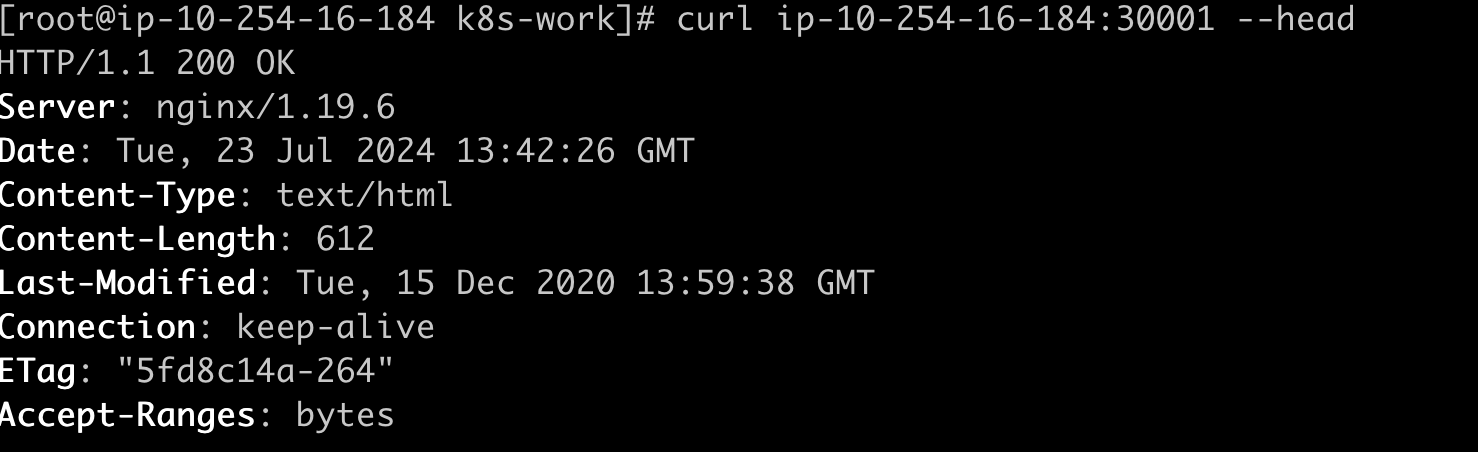
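The `clusterDNS` value in kubelet.json and the `clusterIP` of the kube-dns Service must be the same address (10.96.0.2 in the network plan). Otherwise, Pods are given a nameserver that nothing answers on. The sketch below runs a small consistency check. The helper names are hypothetical, and the grep/awk parsing assumes the file layouts used in this article; it is not a general JSON or YAML parser:

```shell
#!/usr/bin/env bash
# Sketch: check that kubelet.json's clusterDNS matches the kube-dns Service clusterIP.

kubelet_cluster_dns() {
  # $1: kubelet.json; prints the first IPv4 address inside the "clusterDNS" array
  grep -o '"clusterDNS"[^]]*' "$1" | grep -oE '[0-9]+(\.[0-9]+){3}' | head -n 1
}

service_cluster_ip() {
  # $1: manifest; prints the value of the first "clusterIP:" field
  awk '$1 == "clusterIP:" {print $2; exit}' "$1"
}

check_dns_ip() {
  # $1: kubelet.json, $2: coredns.yaml; returns 0 only when both addresses match
  local a b
  a=$(kubelet_cluster_dns "$1")
  b=$(service_cluster_ip "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example usage (not executed here):
#   check_dns_ip /etc/kubernetes/kubelet.json coredns.yaml && echo "DNS IPs match"
```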

Deploy an application to verify the cluster

cat > nginx.yaml << "EOF"
---
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-web
spec:
  replicas: 2
  selector:
    name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.6
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-nodeport
spec:
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
      protocol: TCP
  type: NodePort
  selector:
    name: nginx
EOF

kubectl apply -f nginx.yaml

[root@ip-10-254-16-184 k8s-work]# kubectl get all
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-8548df5d4c-rr6qz   1/1     Running   0          3m33s
pod/nginx-web-mkg64          1/1     Running   0          2m41s
pod/nginx-web-v49n6          1/1     Running   0          2m41s

NAME                              DESIRED   CURRENT   READY   AGE
replicationcontroller/nginx-web   2         2         2       2m41s

NAME                             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
service/kubernetes               ClusterIP   10.96.0.1       <none>        443/TCP        57m
service/nginx-service-nodeport   NodePort    10.96.107.186   <none>        80:30001/TCP   2m41s

NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx   1/1     1            1           3m33s

NAME                               DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-8548df5d4c   1         1         1       3m33s